When designing efficient software systems, especially those that handle large datasets or require high-speed data access, mmap emerges as a powerful tool. In this blog post, we’ll explore everything you need to know about mmap: its uses, benefits, limitations, and why it’s a top choice for event-driven architectures like EventSource. Whether you’re a seasoned developer or diving into advanced software architecture, this guide will be invaluable.

What is mmap?

mmap (memory-mapped file I/O) is a mechanism that maps files or devices into memory. Instead of traditional read and write operations, mmap allows applications to directly access the contents of a file as if it were part of the process’s memory space.

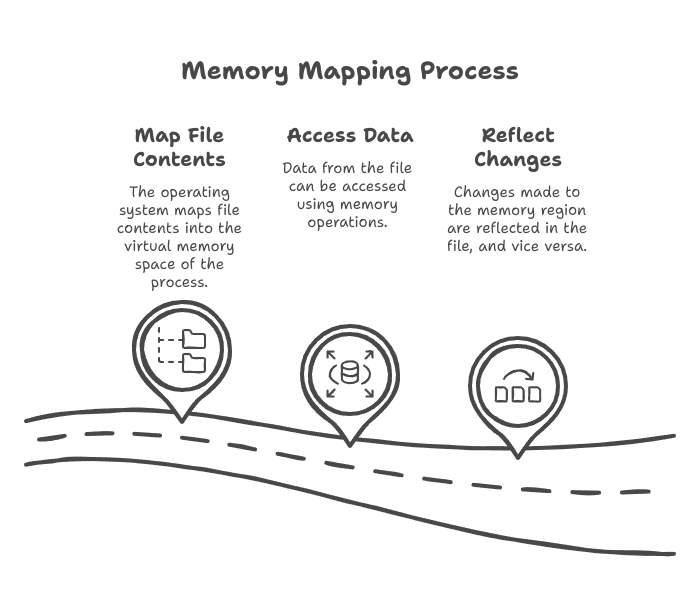

How mmap Works

When a file is memory-mapped:

- The operating system maps file contents into the virtual memory space of the process.

- Data from the file can be accessed using memory operations.

- Changes made to the memory region are reflected in the file, and vice versa (depending on the mode used).

Here’s an example in Python:

    import mmap

    # Open a file and memory-map it
    with open('example.txt', 'r+b') as f:
        with mmap.mmap(f.fileno(), length=0, access=mmap.ACCESS_WRITE) as mm:
            print(mm[:])  # Read contents
            mm[0:5] = b'Hello'  # Modify contents

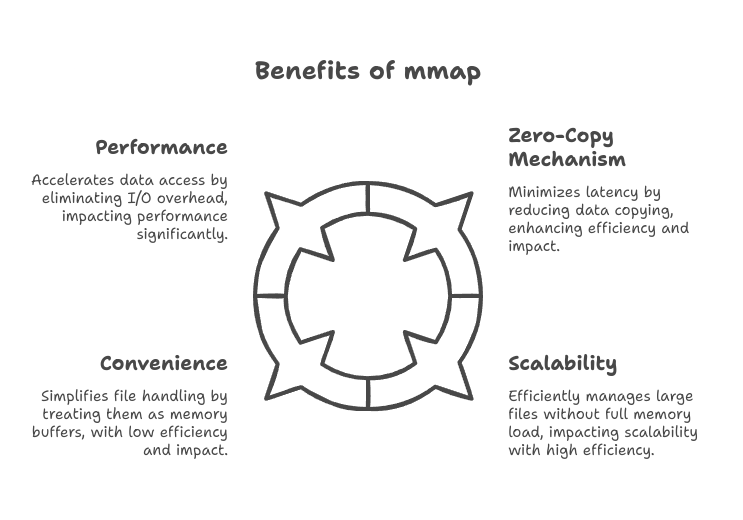
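
To make the "depending on the mode used" point concrete, here is a minimal sketch that writes through two different access modes of Python's mmap module and then reads the file back normally. The helper names `write_via_mapping` and `make_sample_file` and the sample contents are made up for illustration.

```python
import mmap
import os
import tempfile


def write_via_mapping(path: str, access: int) -> bytes:
    """Overwrite the first 5 bytes through a mapping, then return what a normal read sees."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0, access=access) as mm:
            mm[0:5] = b"HELLO"
    with open(path, "rb") as f:
        return f.read()


def make_sample_file() -> str:
    """Create a small temporary file (hypothetical sample data) and return its path."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"hello world")
    return path


path = make_sample_file()
try:
    # ACCESS_COPY is copy-on-write: the change stays private to the mapping.
    print(write_via_mapping(path, mmap.ACCESS_COPY))   # b'hello world'
    # ACCESS_WRITE is write-through: the change reaches the file.
    print(write_via_mapping(path, mmap.ACCESS_WRITE))  # b'HELLO world'
finally:
    os.remove(path)
```

A third mode, `mmap.ACCESS_READ`, maps the file read-only and raises an error on any attempt to assign to the mapping.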

Benefits of mmap

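- Performance: Once a page is mapped, reading or writing it is an ordinary memory access, which avoids issuing a read or write system call for every operation.

- Zero-Copy Mechanism: The process works directly on pages in the kernel’s page cache, so data is not copied into a separate user-space buffer as it is with read.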

- Scalability: Efficiently handles large files without loading them entirely into memory.

- Convenience: Simplifies working with files by treating them as memory buffers.

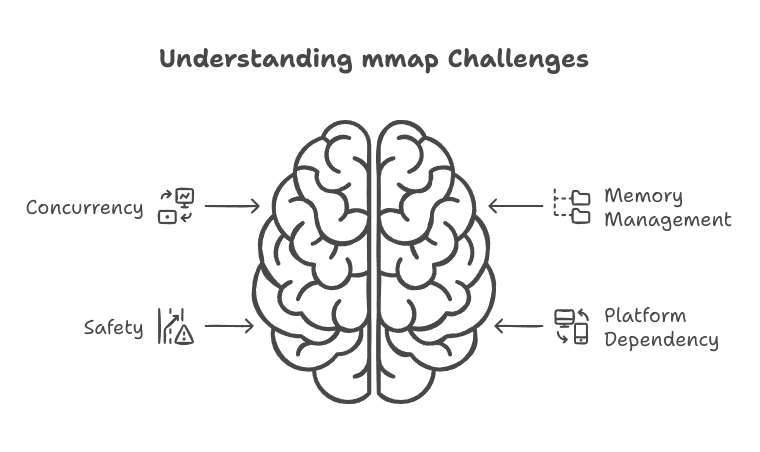
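
The scalability benefit can be illustrated with a small sketch that maps only a window of a large file instead of the whole thing. It assumes Python's mmap module; `read_window`, `make_large_file`, the 64 MiB size, and the marker bytes are hypothetical choices for the demo. The requested window must lie within the file, otherwise mmap raises a ValueError.

```python
import mmap
import os
import tempfile


def read_window(path: str, position: int, size: int) -> bytes:
    """Return `size` bytes starting at `position`, mapping only the region that covers them."""
    # The offset passed to mmap must be a multiple of ALLOCATIONGRANULARITY,
    # so round down and skip the extra leading bytes inside the mapping.
    aligned = position - (position % mmap.ALLOCATIONGRANULARITY)
    skip = position - aligned
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), skip + size, access=mmap.ACCESS_READ, offset=aligned) as mm:
            return mm[skip:skip + size]


def make_large_file(size: int, position: int, marker: bytes) -> str:
    """Create a mostly empty temporary file with `marker` written at `position` (sample data)."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.truncate(size)   # extend with zeros; sparse on most filesystems
        f.seek(position)
        f.write(marker)
    return path


path = make_large_file(64 * 1024 * 1024, 50_000_000, b"EVENT-42")
try:
    print(read_window(path, 50_000_000, 8))   # b'EVENT-42'
finally:
    os.remove(path)
```

Only the pages that the slice touches need to be brought into memory, so the cost depends on the window size rather than the file size.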

Common Issues and Considerations

- Concurrency:

  - When multiple processes map the same file with a shared mapping, changes made by one process are visible to the others.

  - Use file locking mechanisms or synchronize access to avoid race conditions.

- Memory Management:

  - Mapping very large files can exhaust the virtual address space, even if physical memory is available. This is mainly a concern for 32-bit processes.

- Safety:

  - Segmentation faults can occur if you attempt to access memory beyond the mapped region, and a SIGBUS can occur if the file is truncated while it is mapped.

  - Proper error handling is essential to ensure stability.

- Platform Dependency:

  - While mmap is widely supported, behavior can vary across operating systems.
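
The locking advice above could look roughly like the following on Unix-like systems. This is a sketch, not a complete synchronization scheme: it assumes the `fcntl` module (not available on Windows), an advisory `flock` that every writer agrees to take, and a made-up file layout of a single 8-byte counter. The helper names are hypothetical, and threads stand in for separate processes to keep the demo short.

```python
import fcntl
import mmap
import os
import struct
import tempfile
import threading

COUNTER = struct.Struct("<Q")  # one unsigned 64-bit little-endian integer


def increment_shared_counter(path: str) -> int:
    """Increment the 8-byte counter at the start of `path` while holding an exclusive lock."""
    with open(path, "r+b") as f:
        fcntl.flock(f.fileno(), fcntl.LOCK_EX)   # blocks until other holders release the lock
        try:
            with mmap.mmap(f.fileno(), COUNTER.size) as mm:
                (value,) = COUNTER.unpack_from(mm, 0)
                value += 1
                COUNTER.pack_into(mm, 0, value)
                mm.flush()
                return value
        finally:
            fcntl.flock(f.fileno(), fcntl.LOCK_UN)


def make_counter_file() -> str:
    """Create a temporary file holding a counter initialised to zero (sample data)."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(COUNTER.pack(0))
    return path


def run_workers(path: str, workers: int, increments: int) -> int:
    """Increment the counter from several threads and return the final value."""
    def work() -> None:
        for _ in range(increments):
            increment_shared_counter(path)

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    with open(path, "rb") as f:
        (value,) = COUNTER.unpack(f.read(COUNTER.size))
    return value


path = make_counter_file()
try:
    print(run_workers(path, workers=4, increments=50))   # 200
finally:
    os.remove(path)
```

Each call opens the file separately, so the lock serializes the read-modify-write step even between threads of one process. The lock is advisory: a writer that skips `flock` is not stopped by it.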

Operating System Support

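POSIX systems such as Linux, macOS, and the BSDs provide mmap directly, and Windows offers equivalent functionality through CreateFileMapping and MapViewOfFile, so memory-mapped files are available on all major platforms. Key features include: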

- Lazy Loading: Most OSes load file data into memory only when accessed.

- Page Fault Handling: The OS handles loading and swapping pages between memory and disk efficiently.

- File Synchronization: Updates can be automatically synchronized with the file (or deferred for performance).

For Linux, you can use the mmap system call directly in C:

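    #include <fcntl.h>
    #include <stdio.h>
    #include <sys/mman.h>
    #include <sys/stat.h>
    #include <unistd.h>

    int main(void) {
        int fd = open("example.txt", O_RDWR);
        struct stat st;
        if (fd == -1 || fstat(fd, &st) == -1) {
            perror("open/fstat failed");
            return 1;
        }
        size_t len = (size_t)st.st_size;  /* map the file's actual size, not a fixed 4096 */

        char *map = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
        if (map == MAP_FAILED) {  /* also fails for an empty file (len == 0) */
            perror("mmap failed");
            close(fd);
            return 1;
        }

        /* The mapping is not NUL-terminated, so print with an explicit length. */
        printf("Data: %.*s\n", (int)len, map);
        map[0] = 'H';  /* MAP_SHARED: the change is written back to the file */

        munmap(map, len);
        close(fd);
        return 0;
    }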

In Java, you can use the FileChannel and MappedByteBuffer classes to achieve similar functionality:

    import java.io.RandomAccessFile;
    import java.nio.MappedByteBuffer;
    import java.nio.channels.FileChannel;

    public class MemoryMappedFileExample {
        public static void main(String[] args) {
            try (RandomAccessFile file = new RandomAccessFile("example.txt", "rw");
                 FileChannel channel = file.getChannel()) {
                // Map the file into memory
                MappedByteBuffer buffer = channel.map(FileChannel.MapMode.READ_WRITE, 0, channel.size());

                // Read content (treats each byte as one character, so assumes ASCII text)
                for (int i = 0; i < buffer.limit(); i++) {
                    System.out.print((char) buffer.get(i));
                }

                // Modify content; an empty file has nothing to overwrite
                if (buffer.limit() > 0) {
                    buffer.put(0, (byte) 'H');
                    buffer.force(); // Flush the change to the underlying file
                    System.out.println("\nFile updated successfully.");
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

Use Cases for mmap

- Database Systems:

  - Some databases use mmap to access their data files and let the OS page cache act as their cache.

  - Examples: LMDB is built around memory-mapped files, and SQLite offers optional memory-mapped I/O. PostgreSQL uses an anonymous mmap region for its shared memory rather than mapping its data files.

- Log Processing:

  - Access and process large log files without loading them entirely into memory.

- EventSource Architectures:

  - Efficiently manage event streams and state by mapping log files or streams into memory.

- Machine Learning:

  - Memory-map large datasets for efficient training without exceeding physical memory limits.
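
As a small illustration of the log-processing use case, the sketch below scans a log file through a read-only mapping and counts the lines that contain a given byte string. The function name, the sample log lines, and the `ERROR` marker are assumptions made for the example. An empty file cannot be mapped, so a real tool would need to handle that case first.

```python
import mmap
import os
import tempfile


def count_matching_lines(path: str, needle: bytes) -> int:
    """Count lines containing `needle` by scanning a read-only mapping of the file."""
    count = 0
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            # readline() returns b"" once the end of the mapping is reached.
            for line in iter(mm.readline, b""):
                if needle in line:
                    count += 1
    return count


def make_sample_log() -> str:
    """Write a tiny log file (hypothetical sample data) and return its path."""
    fd, path = tempfile.mkstemp(suffix=".log")
    lines = [
        b"INFO service started\n",
        b"ERROR disk full\n",
        b"INFO retrying\n",
        b"ERROR timeout\n",
        b"INFO done\n",
    ]
    with os.fdopen(fd, "wb") as f:
        f.writelines(lines)
    return path


path = make_sample_log()
try:
    print(count_matching_lines(path, b"ERROR"))   # 2
finally:
    os.remove(path)
```

The OS pages the file in as the scan advances, so the script never holds the whole log in a Python bytes object.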

Why mmap is Perfect for EventSource

EventSource architectures rely heavily on efficient event logs to process and replay events. Here’s why mmap shines in this context:

- High Throughput: Memory-mapped files enable rapid access and updates to logs, crucial for event processing.

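- Durability: Writes to a mapping reach the disk only when the OS writes the pages back, so call msync (or the language’s equivalent flush) at the points where events must be durable.

- Real-Time Performance: Events in pages that are already resident can be read without a system call. The first access to a page still incurs a page fault and possibly a disk read.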

- Scalability: Handles large event logs without overwhelming memory, supporting systems with high event volumes.
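
One way the points above might come together is a fixed-capacity, append-only event log backed by a memory-mapped file. The sketch below is illustrative only: the class name `MmapEventLog`, the on-disk layout (an 8-byte header holding the next write offset, followed by length-prefixed events), and the default capacity are all assumptions, not a standard format. It is single-writer and does not handle torn writes or crash recovery.

```python
import mmap
import os
import struct
import tempfile
from typing import Iterator

HEADER = struct.Struct("<Q")   # offset of the next free byte
LENGTH = struct.Struct("<I")   # length prefix stored before each event


class MmapEventLog:
    """Fixed-capacity append-only log in a memory-mapped file (hypothetical layout)."""

    def __init__(self, path: str, capacity: int = 1 << 20) -> None:
        is_new = not os.path.exists(path) or os.path.getsize(path) == 0
        self._file = open(path, "w+b" if is_new else "r+b")
        if is_new:
            self._file.truncate(capacity)   # preallocate: a mapping cannot grow the file
        self._mm = mmap.mmap(self._file.fileno(), 0)
        if is_new:
            HEADER.pack_into(self._mm, 0, HEADER.size)   # first event goes after the header

    def append(self, payload: bytes) -> None:
        (end,) = HEADER.unpack_from(self._mm, 0)
        new_end = end + LENGTH.size + len(payload)
        if new_end > len(self._mm):
            raise ValueError("event log is full")
        LENGTH.pack_into(self._mm, end, len(payload))
        self._mm[end + LENGTH.size:new_end] = payload
        HEADER.pack_into(self._mm, 0, new_end)   # publish the event last
        self._mm.flush()                         # ask the OS to write the pages to disk

    def replay(self) -> Iterator[bytes]:
        (end,) = HEADER.unpack_from(self._mm, 0)
        pos = HEADER.size
        while pos < end:
            (size,) = LENGTH.unpack_from(self._mm, pos)
            pos += LENGTH.size
            yield self._mm[pos:pos + size]
            pos += size

    def close(self) -> None:
        self._mm.close()
        self._file.close()


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "events.log")
    log = MmapEventLog(path, capacity=4096)
    log.append(b'{"type": "created", "id": 1}')
    log.append(b'{"type": "renamed", "id": 1}')
    log.close()

    reopened = MmapEventLog(path)   # existing file: its size determines the capacity
    for event in reopened.replay():
        print(event)
    reopened.close()
```

Flushing after every append favours durability over throughput. Batching several appends per flush is a common trade-off when losing the last few events after a crash is acceptable.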

Best Practices

- Error Handling:

  - Always check for errors during mapping and unmapping.

- Resource Management:

  - Use munmap to release memory when it’s no longer needed.

- Synchronization:

  - Use msync for explicit synchronization when necessary.

- Profiling and Testing:

  - Profile memory usage and performance to ensure mmap meets system requirements.
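
In Python, the first three practices might translate into something like the sketch below: errors from opening or mapping are caught, the `with` blocks release the mapping (the counterpart of munmap), and `flush()` plays the role of msync. The function name `patch_file` and its return-a-boolean convention are choices made for this example.

```python
import mmap
import os
import tempfile


def patch_file(path: str, offset: int, data: bytes) -> bool:
    """Overwrite `data` at `offset` through a mapping; return False if that is not possible."""
    try:
        with open(path, "r+b") as f:
            # The context manager unmaps the region even if an exception is raised.
            with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as mm:
                if offset < 0 or offset + len(data) > len(mm):
                    return False   # refuse writes outside the mapped region
                mm[offset:offset + len(data)] = data
                mm.flush()         # explicit synchronization with the file
                return True
    except (OSError, ValueError):
        # OSError: the file cannot be opened or mapped. ValueError: e.g. the file is empty.
        return False


fd, path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    f.write(b"hello world")
try:
    print(patch_file(path, 0, b"HELLO"))      # True
    print(patch_file(path, 100, b"oops"))     # False: outside the file
finally:
    os.remove(path)
```

Returning a boolean keeps the example short. A production version would more likely raise or log a specific error so the caller can tell a missing file from an out-of-range write.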

Conclusion

mmap is a robust tool in the software architect’s arsenal. Its ability to efficiently manage large files, combined with its performance benefits, makes it ideal for high-performance applications. While it has challenges, careful design and implementation can unlock mmap’s full potential. For EventSource architectures, mmap’s real-time capabilities and durability make it an unparalleled choice.